The Skinner box

As you know, I’m not a big fan of social media, nor much of a user. This means I miss out on a lot, but that’s a topic for another day. I think my reluctance is about more than old age and “the world ain’t what it used to be.” It’s great that people can readily share their thoughts, feelings, ideas, triumphs, and challenges in a place where others can see them, and see those of others in turn. The devil, as always, is in the implementation; or, more precisely, in the economics: how it’s paid for. Can we do better?

Yesterday, someone close to me shared a thought that I’d like to pass on to you. Here it is:

“We have diverted our social instincts into, like, this weird Skinner box.”

For me, this brilliantly captures one of the problems we’re facing, a place we’ve gotten ourselves into.

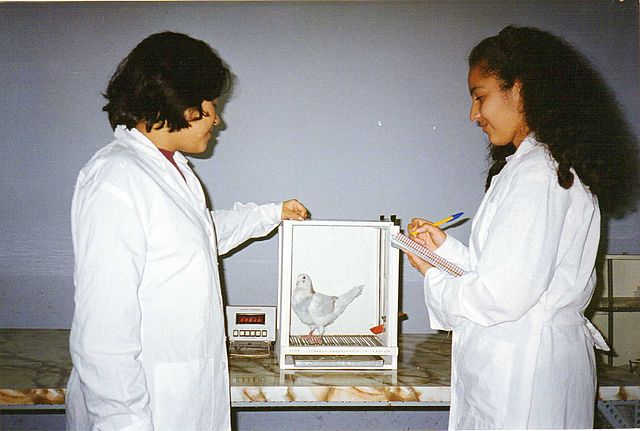

Do you know what a Skinner box is? It’s a device famously used by the psychologist B.F. Skinner to study how reward and punishment shape behavior, and to learn to control the behavior of lab rats, pigeons, primates, and other creatures.

What does that have to do with social media?

Machine learning and AI training and feedback

Social media platforms run artificial intelligence and machine learning algorithms whose prime objectives are to:

- show you content that keeps you coming back

- show you ads that pay, due to relevance

The genius is that you and everybody else supply the content. All the platform has to do is:

- show you content that keeps you coming back

- show you ads that pay, due to relevance

So what’s the problem with showing you relevant content and advertising?

The problem is the reward-behavior feedback loop. The platform can get better at:

- showing you content that keeps you coming back

- showing you ads that pay, due to relevance

by classifying you and driving you toward ever tighter categories. It is no longer just you training the platform; the platform is also training you. That’s how it works like a Skinner box.

So it’s no wonder that our social interactions are becoming more and more polarized. Within the world of the platform’s AI, polarization is a (possibly unintended) by-product of optimizing its goals.

It is a design for profit. We’re the lab rats. We’re the humans of “The Matrix”, in pods, with our brains attached to a virtual world, generating power for the robots. The power is mountains of cash to fuel the machines, pay the staff, and generate unimaginable riches for a few individuals and, to a lesser extent, public shareholders. (I probably hold a mutual fund that holds shares in some or many of these companies.)

This platform world has insinuated itself into our real world, overlaps with it, and now wields great power and influence in it. So our real world now has a component of direct influence and training never before possible. Posters, editorials, and leaflets as propaganda, move over. Now we use direct rewards and punishments to train (or distract) public opinion and behavior.

Keeping the baby

How do we rescue the good (being able to readily share our lives with others, and to participate in others’ lives via our phones and computers) and vanquish the bad (fragmentation, polarization, exploitation)?

The answer, paradoxically, is that the platform must not be programmed to:

- show you content that keeps you coming back

- show you ads that pay, due to relevance

That being the case, it can’t be profit-driven. It simply has to:

- show you content that you’ve explicitly expressed interest in seeing (such as people, organizations, or tags that you “follow”)

- engage you solely by being useful

Part of the usefulness of these applications comes from other people using them. Getting others to use a platform and check in is a trick, and once a critical mass is there, moving is tough. But it has happened. Remember Myspace? GeoCities? Prodigy? These platforms do evolve and replace one another.

The challenge

So I challenge you to develop replacement platforms, funded by something other than profit and run for the public good, that:

- equal or exceed ease of sharing

- equal or exceed ubiquity of use

- do not track or centralize individual metadata, such as who you are connected with and what you follow

- do not train or try to train you, but simply follow your expressed interests

It’s going to be tough, given all those little social media sharing icons everywhere that drive people back into the existing platforms. That doesn’t mean it’s impossible. First, the replacement has to be really nice to use.

Some references

One platform that operates as a non-profit, and that a whole world of people use, is Wikipedia. So there’s proof by example. Here’s also a shout-out to the messaging application Signal and the social networking platform Mastodon.

Antisocial Media is a deeper dive into the themes expressed here. It focuses on the largest of the big new media platforms.

I’d like to read some of the articles collected in The Dark Side of Media and Technology: A 21st Century Guide to Media and Technological Literacy, but it’s a little pricey for me. There’s a chapter, “The Dark Side of Ignoring the Terms of Service and Privacy Policies,” that looks particularly interesting.

Photos from Wikimedia Commons

Credits: